Canary Testing Your Backend API on AWS

How canary testing can help you safely validate changes to your APIs in production.

No matter how much you test a new version of your API before release, the true test occurs when you finally put it in front of customers. Problems that you never detected or foresaw can unexpectedly rear their ugly heads. In this article, I talk about how you can use canary testing to vet your release in production.

What is Canary Testing?

In canary testing, your team rolls out a new version of an application only to a small percentage of your user base. The phrase comes from the expression "canary in a coal mine". It harkens back to the days when miners would lower a canary down into a mine shaft to verify it wasn't teeming with toxic gases such as carbon monoxide.

Fortunately, software canary testing never harms any actual canaries. With software canary testing, you keep both the existing and the new versions of your software running simultaneously. You then divert only a small portion of users - e.g., 1% - to the new code and monitor its performance.
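
To make the traffic split concrete, here is a minimal sketch of percentage-based routing. The function name `choose_version` and the labels "stable" and "canary" are my own placeholders, and a real load balancer or gateway would make this decision for you; the sketch only illustrates the idea.

```python
import random


def choose_version(canary_percent: float, rng: random.Random) -> str:
    """Return "canary" for roughly canary_percent of calls, otherwise "stable"."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # rng.random() is uniform in [0.0, 1.0), so scaling it gives [0.0, 100.0).
    return "canary" if rng.random() * 100 < canary_percent else "stable"


# Simulate 100,000 requests with 1% of traffic diverted to the canary.
rng = random.Random(42)  # seeded only so the simulation is repeatable
counts = {"stable": 0, "canary": 0}
for _ in range(100_000):
    counts[choose_version(1.0, rng)] += 1

print(counts)
```

With a 1% weight, roughly one request in a hundred should land on the canary, although the exact count varies from run to run.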

If your canary testing doesn't produce errors, you can expand it to increasingly larger percentages of your user base. Eventually, 100% of your users will be on the new version. If the new software has some sort of defect - e.g., it throws HTTP 500 server errors, or performance is severely degraded - you can roll back to the existing version. You can then attempt another canary test after your team addresses the issues it discovered.
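
The expand-or-roll-back loop can be sketched as follows. Everything here is illustrative: the stage percentages, the 1% error threshold, and the `observe_error_rate` callback (a stand-in for whatever your monitoring system reports) are assumptions, not values from any particular tool.

```python
from typing import Callable, Sequence, Tuple


def run_rollout(
    stages: Sequence[int],
    observe_error_rate: Callable[[int], float],
    max_error_rate: float = 0.01,
) -> Tuple[str, int]:
    """Step through traffic percentages and stop at the first unhealthy stage.

    Returns ("promoted", last_percent) if every stage stays healthy, or
    ("rolled_back", failed_percent) as soon as the error rate is too high.
    """
    for percent in stages:
        error_rate = observe_error_rate(percent)
        if error_rate > max_error_rate:
            return ("rolled_back", percent)
    return ("promoted", stages[-1])


stages = [1, 5, 25, 50, 100]

# A healthy release: the error rate stays low at every stage.
print(run_rollout(stages, lambda percent: 0.001))
# prints ('promoted', 100)

# A defective release: errors spike once a quarter of traffic hits the new code.
print(run_rollout(stages, lambda percent: 0.001 if percent < 25 else 0.05))
# prints ('rolled_back', 25)
```

In practice you would also wait at each stage long enough to collect meaningful data before moving on.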

Canary Testing vs. Blue-Green Deployments

Canary testing may sound similar to the concept of blue-green deployments. But there's an important difference.

In a blue-green deployment, you stand up a completely new version of your entire architecture. You then slowly route users from the old version to the new version.

By contrast, in canary testing, you don't re-provision the entire application. Instead, you steadily replace the application nodes that your users access. This can mean provisioning new virtual machines with the new code, or preparing a new Docker container with the code changes.

Blue-green is a more straightforward pattern to implement. However, it also requires standing up a duplicate version of your entire architecture. Some teams might find this cost-prohibitive. Canary testing gives many of the same benefits as blue-green while conserving cloud resources and keeping costs low.

Who Gets the New Version?

So how do you decide which users get the new version in a canary test? Teams that implement the canary pattern use a wide range of criteria. Some include:

- Random sampling of users

- Internal company users

- Trusted partners/integrators

- Users in a specific geographic region

- Users who have explicitly signed up to test-drive new releases
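
The criteria above can be combined in a single selection function. The sketch below is one possible approach, not a prescribed one: the `User` fields, the `example.com` internal domain, and the parameter names are all hypothetical. For random sampling it hashes the user ID so that a given user lands in the same group on every request.

```python
import hashlib
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class User:
    user_id: str
    email: str
    region: str
    beta_opt_in: bool = False


def in_random_sample(user_id: str, percent: int) -> bool:
    """Deterministically place a user in one of 100 buckets by hashing the ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def gets_canary(
    user: User,
    *,
    internal_domain: str = "example.com",  # hypothetical company domain
    trusted_partner_ids: FrozenSet[str] = frozenset(),
    canary_regions: FrozenSet[str] = frozenset(),
    sample_percent: int = 0,
) -> bool:
    """Return True if any of the selection criteria applies to this user."""
    if user.email.lower().endswith("@" + internal_domain):
        return True  # internal company user
    if user.user_id in trusted_partner_ids:
        return True  # trusted partner or integrator
    if user.region in canary_regions:
        return True  # user in a targeted geographic region
    if user.beta_opt_in:
        return True  # user explicitly signed up for early releases
    return in_random_sample(user.user_id, sample_percent)


employee = User("u1", "dev@example.com", "us-east-1")
customer = User("u2", "someone@customer.test", "eu-west-1")
print(gets_canary(employee))                     # prints True
print(gets_canary(customer, sample_percent=0))   # prints False
```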

Backend APIs on AWS

For this article, I define a "backend API" as an Application Programming Interface that developers use to access server-side resources (e.g., databases, file stores) and implement server-side application logic.

Canary deployments can be an effective way to deploy non-breaking changes to a backend API. If your team has made significant changes that alter an existing API call's method signature, that's a breaking change. In that case, you will likely want to create a separate API endpoint for the new version. Callers can then alter and test their code against the new version before releasing their own changes.

However, if you are altering code without altering any method signatures, a canary deployment can be a great way to roll out changes slowly. You can steadily expose the revised API to an increasing percentage of callers. This kind of careful rollout is good for backend APIs on which a large number of internal and external applications depend. By exposing only a limited number of initial callers to the new code, you reduce the risk of creating a systemwide outage with downstream effects.

Implementing Canary Testing with Docker Containers

As I've discussed before, there are numerous ways to host applications on AWS. At TinyStacks, we're huge fans of Docker containers.

Docker provides a convenient, lightweight way to package your code together with all of its dependencies. Once you've converted your app into a Docker container, you can run it on any cloud provider and service that supports Docker.

Docker also makes it easy to deploy changes to your backend API. You can release changes simply by building and releasing a new version of your container. You can then implement canary deployments by slowly routing a percentage of existing traffic to the new running container.

There are multiple ways on AWS to conduct a canary deployment. Below are two of the most common and well-documented. Both assume that you have previously deployed your Docker container on an Amazon Elastic Container Service (ECS) cluster. (If you've never done that before, check out Francesco Ciulla's tutorial!)

Canary Support in AWS API Gateway

I've talked before about API Gateway, which provides a front end to APIs hosted on various AWS services. Using API Gateway lets you leverage the service's numerous API management features, such as authentication, request throttling, and stage deployment management.

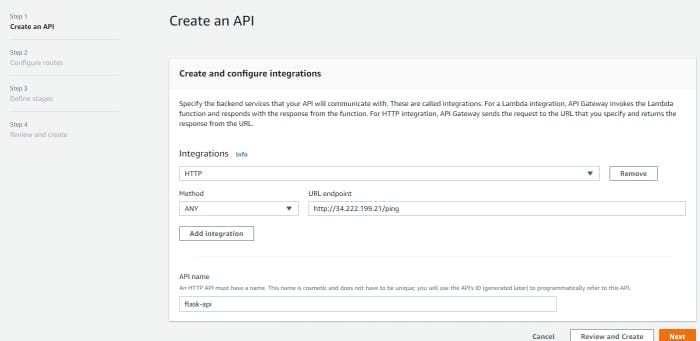

You can easily use API Gateway as an entry point to your Docker-hosted API on ECS. Once your API is running on ECS, you can use the HTTP Proxy integration type to map API Gateway routes to the routes exposed by your backend API.

Even better, API Gateway directly supports canary deployments through its deployment stages. As I've discussed before, a deployment stage is a full deployment of your application that supports a particular purpose - e.g., development, testing, preview, or production use.

To support a canary deployment, you add a canary stage to a current stage (e.g., production) of your API Gateway deployment. You can configure the canary to direct a certain percentage of traffic away from the current stage to the canary. Then, you can map the routes from the canary to a new running Docker container containing your current changes. Your canary's logs will be sent to separate Amazon CloudWatch Logs streams so that you can monitor the output from the canary separately from the main application.
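
As a rough illustration, the canary settings for an API Gateway REST API can be passed to the `create_deployment` call in boto3. This is a sketch under several assumptions: the API ID, the stage name, and the `backendHost` stage variable are placeholders, and it presumes your HTTP Proxy integration URI reads its host from a stage variable (e.g., `${stageVariables.backendHost}`). The request is built in a plain function so that you can inspect it before sending anything to AWS.

```python
from typing import Any, Dict, Mapping


def canary_deployment_request(
    rest_api_id: str,
    stage_name: str,
    percent_traffic: float,
    stage_variable_overrides: Mapping[str, str],
) -> Dict[str, Any]:
    """Build keyword arguments for the API Gateway create_deployment call."""
    if not 0.0 <= percent_traffic <= 100.0:
        raise ValueError("percent_traffic must be between 0 and 100")
    return {
        "restApiId": rest_api_id,
        "stageName": stage_name,
        "description": "Canary deployment of the new backend container",
        "canarySettings": {
            "percentTraffic": percent_traffic,
            # Overrides apply only to requests routed to the canary.
            "stageVariableOverrides": dict(stage_variable_overrides),
            "useStageCache": False,
        },
    }


def deploy_canary(request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request to AWS. Requires boto3 and valid AWS credentials."""
    import boto3  # third-party; imported here so the builder runs without it

    return boto3.client("apigateway").create_deployment(**request)


# Hypothetical values: replace with your own API ID and container host.
request = canary_deployment_request(
    rest_api_id="abc123",
    stage_name="prod",
    percent_traffic=5.0,
    stage_variable_overrides={"backendHost": "canary.internal.example.com"},
)
print(request["canarySettings"])
```

Calling `deploy_canary(request)` would then create the deployment with 5% of the stage's traffic sent to the canary.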

The upside to API Gateway's canary support is that it's easy. Once you've set up API Gateway, adding a canary stage is straightforward. Another upside is that you can use this strategy no matter how you host your API. For example, if you choose to use AWS Lambda to implement your API rather than Docker containers, you can front your Lambda API using API Gateway and leverage its canary feature to onboard changes.

The downside is that API Gateway's canary feature only supports a random traffic strategy. In other words, if you want to preview your canary using different criteria - e.g., only exposing the canary to internal users, or exposing it to early adopters - you'll need to devise a different strategy.

Canary with ECS and AWS CodeDeploy

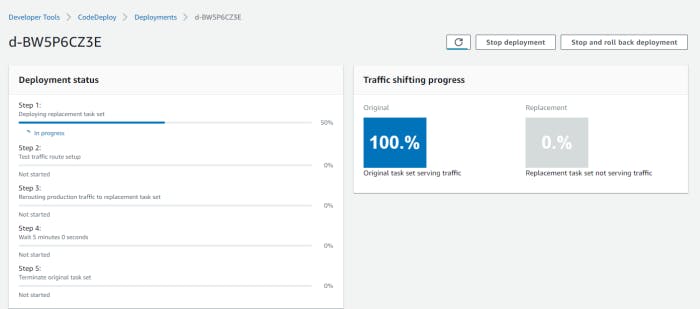

AWS CodeDeploy is an AWS technology that enables automatic deployment and updating of applications. Originally designed to deploy applications to Amazon EC2 instances, CodeDeploy now also supports ECS deployments. Using CodeDeploy, you can execute a full blue-green deployment, in which you stand up a new (green) deployment of your application alongside the old (blue) deployment.

You can also leverage the same technology used for blue-green deployments to support canary deployments on ECS with CodeDeploy. After standing up your second environment, CodeDeploy uses weighted target groups in Application Load Balancer to shift over traffic gradually to your new stack.

The CodeDeploy approach is a little more involved than the API Gateway approach, as it requires understanding and implementing CodeDeploy deployments. However, once set up, it provides an easy way to perform an automated canary deployment that shifts traffic gradually over from your old Docker image to your new one.
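
CodeDeploy ships with predefined ECS deployment configurations such as `CodeDeployDefault.ECSCanary10Percent5Minutes`, which shifts 10% of traffic first and the remainder five minutes later. If you need different numbers, you can define your own configuration. The sketch below builds the parameters for boto3's `create_deployment_config` call; the configuration name and the helper functions are my own placeholders.

```python
from typing import Any, Dict, List, Tuple


def canary_config_request(
    name: str, canary_percentage: int, interval_minutes: int
) -> Dict[str, Any]:
    """Build keyword arguments for CodeDeploy's create_deployment_config call."""
    if not 0 < canary_percentage < 100:
        raise ValueError("canary_percentage must be between 1 and 99")
    if interval_minutes <= 0:
        raise ValueError("interval_minutes must be positive")
    return {
        "deploymentConfigName": name,
        "computePlatform": "ECS",
        "trafficRoutingConfig": {
            "type": "TimeBasedCanary",
            "timeBasedCanary": {
                "canaryPercentage": canary_percentage,
                "canaryInterval": interval_minutes,
            },
        },
    }


def traffic_schedule(
    canary_percentage: int, interval_minutes: int
) -> List[Tuple[int, int]]:
    """Return (minute, percent of traffic on the new version) for each shift."""
    return [(0, canary_percentage), (interval_minutes, 100)]


def create_config(request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request to AWS. Requires boto3 and valid AWS credentials."""
    import boto3  # third-party; imported here so the helpers run without it

    return boto3.client("codedeploy").create_deployment_config(**request)


# Hypothetical configuration: 20% of traffic first, the rest after 15 minutes.
request = canary_config_request("MyEcsCanary20Percent15Minutes", 20, 15)
print(traffic_schedule(20, 15))
# prints [(0, 20), (15, 100)]
```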

Monitoring the Canary Test

Canary testing assumes you already have excellent monitoring in place. Without a good monitoring strategy and implementation, there's no way to tell if your canary is functioning, erroring out, or returning a mix of successes and errors ("Schrödinger's canary", if you will).

Your monitoring should focus on the same metrics you'd typically monitor: HTTP errors, application errors (exceptions, etc.), response times, load, etc. However, you'll need to ensure that you can distinguish your canary's metrics from those of your existing production application. You may want to look into commercial tracing tools, such as Dynatrace, that support canary testing as a first-class feature.
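
Here is a minimal sketch of what separating the two sets of metrics might look like. It assumes each request record is tagged with the version that served it ("stable" or "canary") along with its HTTP status code. The thresholds are arbitrary examples, and a real setup would pull these numbers from your monitoring system rather than from an in-memory list.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass
class VersionStats:
    requests: int = 0
    errors: int = 0

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def summarize(records: Iterable[Tuple[str, int]]) -> Dict[str, VersionStats]:
    """Group (version, http_status) records and count 5xx responses per version."""
    stats: Dict[str, VersionStats] = {}
    for version, status in records:
        entry = stats.setdefault(version, VersionStats())
        entry.requests += 1
        if status >= 500:
            entry.errors += 1
    return stats


def canary_is_healthy(
    stats: Dict[str, VersionStats],
    max_extra_error_rate: float = 0.01,
    min_requests: int = 100,
) -> Optional[bool]:
    """Compare the canary's error rate to the stable version's.

    Returns None when the canary has not yet served enough requests to judge.
    """
    canary = stats.get("canary", VersionStats())
    stable = stats.get("stable", VersionStats())
    if canary.requests < min_requests:
        return None
    return canary.error_rate <= stable.error_rate + max_extra_error_rate


records = (
    [("stable", 200)] * 990 + [("stable", 500)] * 10
    + [("canary", 200)] * 90 + [("canary", 500)] * 10
)
stats = summarize(records)
print(canary_is_healthy(stats))
# prints False: the canary fails 10% of requests versus 1% for stable
```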

Conclusion

Canary testing can help validate that the changes you've made to your backend API are truly ready to go. By redirecting only a percentage of traffic to new code at a time, you can monitor changes to verify they're performing correctly - and limit your blast radius should anything go wrong.

Header image: Shutterstock (used under license by the author)