Migrating from DigitalOcean to AWS: Converting Spaces to S3

Why would you migrate off of DigitalOcean Spaces to Amazon S3 - and how? We cover the reasons plus offer a step-by-step guide.

If you're just starting your journey in the cloud, Platform as a Service (PaaS) systems like Heroku and DigitalOcean can seem tantalizing. However, as your business grows, their limitations become more and more clear.

In this article, I'll discuss DigitalOcean Spaces, the company's storage solution, and why so many companies quickly outgrow it. I'll also talk about how TinyStacks can act as a better bridge to the cloud. Finally, I'll look at how to bring your storage off of Spaces and into your own AWS account.

DigitalOcean Spaces vs Amazon S3

Like Heroku, DigitalOcean aims to provide an easier onramp to the cloud. It's a service aimed at customers who are overwhelmed by the complexity of cloud providers such as AWS.

With DigitalOcean, you can launch your application in a fraction of the time it would require on AWS. You can also leverage multiple DigitalOcean services to support application hosting. One of those is DigitalOcean Spaces.

Built on the object storage system Ceph, Spaces provides a competitive storage alternative to Amazon's S3 file storage service. The base Spaces plan charges a flat $5/month for up to 250GiB of storage and up to 1TiB of data transfer out.

Customers can use Spaces for a host of static data storage needs. These range from hosting static Web site assets (JavaScript files, images, etc.) to hosting large file downloads (installable applications, data sets).

Spaces can represent a nice cost saving over Amazon S3, where only the first GiB of data transfer to the Internet is free. And since Spaces is fully S3-compatible, SDK code that works with S3 will work with a Spaces account.

As another plus, Spaces offers a Content Delivery Network (CDN) at no additional cost. This means that content is served to your customers from the regional endpoint closest to their own geographical location, which improves load times for Web pages and other static assets.

Amazon S3

It may go without saying, but S3 is AWS's own file storage solution. For many software engineers, it's their go-to solution for cloud file storage - and for good reason.

Spaces covers the same use cases that S3 does. However, S3 provides a level of performance and scalability that few other services can match.

S3 also provides a number of features you won't find in DigitalOcean Spaces. These include, but aren't limited to:

- An array of storage classes that enable you to minimize storage costs by matching the availability level and access frequency of your data

- A number of options for transferring large volumes of data into S3, including via secure physical storage devices

- Features like S3 Select that enable query-in-place so you can drive complex Machine Learning and AI algorithms without ever transferring your data out of S3

The downsides of DigitalOcean Spaces

Beyond the feature gap, DigitalOcean's offering has some severe limitations when compared to AWS. Some of these might not be apparent or relevant when your team is just ramping up. As the size and scale of your application grows, however, these may become more pressing.

Some of these limitations include:

Data proximity. DigitalOcean supports only five regional endpoints. By contrast, as of this writing, AWS supports 24. This limits your options when it comes to keeping data close to your customers.

Data residency. The regional issue is even worse if you need to do business in a country with data residency requirements. If you have to keep customers' data in the same region where they reside, then Spaces may be a no-go from the get-go.

Data security. You may not want to transmit sensitive data over the public Internet. Even if it's encrypted in transit, there's a non-zero possibility someone could exploit a security hole and gain access to it. If you use AWS instead, you can leverage services like AWS PrivateLink for S3 to keep that traffic on the AWS network and off the public Internet, which reduces your exposure to man-in-the-middle attacks.

Speed and scalability. Given the greater breadth of regions and endpoints in AWS, your application may run a lot faster on AWS depending on your scenario. This is particularly true if your application will process a high volume of storage transactions. A DigitalOcean Space is limited to 240 operations per second, and all Spaces in your account combined are limited to a total of 750 operations per second. By contrast, a single S3 bucket can process at least 3,500 write requests (PUT, COPY, POST, DELETE) or 5,500 read requests (GET, HEAD) per second per prefix (file path).
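To get a rough feel for what these limits mean in practice, here is a back-of-the-envelope sketch. It assumes a hypothetical workload of one million requests, spread evenly, with the rate limit as the only bottleneck. Real throughput will also depend on object size, network latency, and retries.

```shell
#!/usr/bin/env bash
# Rough time to push a batch of requests through a per-second rate limit.
# Usage: seconds_at_rate TOTAL_REQUESTS REQUESTS_PER_SECOND
seconds_at_rate() {
  local total=$1 rate=$2
  # Integer ceiling division: (total + rate - 1) / rate
  echo $(( (total + rate - 1) / rate ))
}

# Hypothetical workload: one million requests against a single Space or S3 prefix.
echo "Spaces at 240 ops/s: $(seconds_at_rate 1000000 240) seconds"
echo "S3 at 3,500 ops/s:   $(seconds_at_rate 1000000 3500) seconds"
```

Under these assumptions, the same batch takes a little over an hour against a single Space and under five minutes against a single S3 prefix.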

Pricing. In the long run, PaaS solutions tend to run much more expensive than the equivalent AWS functionality. See our analysis on Heroku PostgreSQL vs. Amazon RDS for an example.

The TinyStacks advantage: Easy deployment on AWS

The truth is, you'll always run into limitations like this with a Platform as a Service system like DigitalOcean or Heroku. That's because they make cloud deployment easy by limiting your options. PaaS systems offer a simplified, scaled-down cloud feature set that's easy to use but, ultimately, restricted in functionality.

At TinyStacks, we take a different approach. Our system enables you to take your code to the cloud in minutes. We do all of this on top of an AWS account that you own and manage.

With the TinyStacks approach, you get the best of both worlds. Your company's teams can launch secure, stable application stacks on AWS regardless of their cloud or DevOps knowledge. As their comfort with the cloud grows, they can add in other AWS services easily.

If you're using DigitalOcean currently (or thinking of using it), you can sign up for TinyStacks and try it yourself!

Moving from Spaces to Amazon S3

So let's say you've decided to make the leap from DigitalOcean to Amazon. Maybe you're using TinyStacks, or you've decided to migrate directly to AWS. How do you get your data off of Spaces and onto Amazon S3?

Fortunately, the Rclone tool makes this easy. Rclone is a self-described Swiss army knife for storage that supports over 40 different cloud storage products and storage protocols.

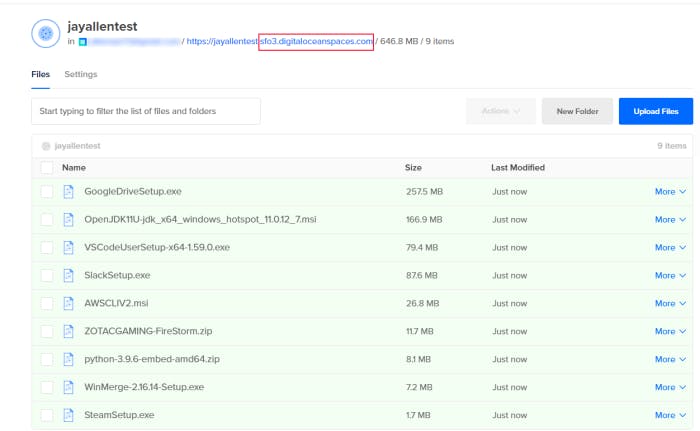

Let's walk through this to show just how easy it is. For this walkthrough, I've created a Space on DigitalOcean that contains some random binary files.

We'll want to transfer this into an Amazon S3 bucket in our AWS account. I've created a bucket for this purpose.

Installing AWS CLI and Rclone

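Rclone talks to the S3 API directly, so it doesn't strictly depend on the AWS CLI. Still, the CLI is a convenient way to set up your AWS credentials and confirm that they work. If you don't have it installed, install and configure it with an access key and secret key that have access to your AWS account.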

You'll also need to install Rclone. On Linux/Mac/BSD systems, you can run the following command:

curl https://rclone.org/install.sh | sudo bash

Or, you can install using Homebrew:

brew install rclone

On Windows systems, download and install the appropriate executable from the Rclone site. Make sure to add rclone to your system's PATH afterward so that the subsequent commands in this tutorial work.
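Before moving on, it can help to confirm that both tools are actually reachable from your shell. The following is a minimal sketch for Linux/Mac/BSD; the helper name have_tool is my own and not part of either tool.

```shell
#!/usr/bin/env bash
# Report whether a command is available on PATH.
have_tool() {
  command -v "$1" >/dev/null 2>&1
}

# Check for Rclone and the AWS CLI (installed as "aws").
for tool in rclone aws; do
  if have_tool "$tool"; then
    echo "$tool: found at $(command -v "$tool")"
  else
    echo "$tool: not found on PATH"
  fi
done
```

Once rclone is found, running rclone version prints the installed version.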

Obtaining your Spaces connection information

To use Rclone to perform the copy, you'll need to create an rclone.conf file that enables Rclone to connect to both your AWS S3 bucket and to your Spaces space.

If you've set up your AWS CLI, you already have your access key and secret key for AWS. You will need two pieces of information from Spaces:

- The URL to the endpoint for your Space; and

- An access key and secret key from DigitalOcean that provide access to your Space.

Obtaining your Spaces endpoint is easy: just navigate to your Space in DigitalOcean, where you'll see the URL for your Space. The endpoint you'll use is the regional endpoint without the name of your space (the part highlighted in the red rectangle below):

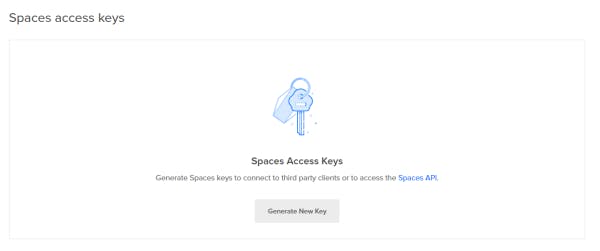

To create an access key and secret key for Spaces, navigate to the API page on DigitalOcean. Underneath the section Spaces access keys, click Generate New Key.

Give your key a name and then click the blue checkmark next to the name field.

Spaces will generate an access key and a secret token for you, both listed under the column Key. (The actual values in the screenshot below have been blurred for security reasons.) Leave this screen as is - you'll be using these values in just a minute.

Configure rclone.conf file and perform copy

Now you need to tell Rclone how to connect to each of the services. To do this, create an rclone.conf file in ~/.config/rclone/rclone.conf (Linux/Mac/BSD) or in C:\Users\<username>\AppData\Roaming\rclone\rclone.conf (Windows). The file should use the following format:

[s3]

type = s3

env_auth = false

access_key_id = AWS_ACCESS_KEY

secret_access_key = AWS_SECRET

region = us-west-2

acl = private

[spaces]

type = s3

env_auth = false

access_key_id = SPACES_ACCESS_KEY

secret_access_key = SPACES_SECRET

endpoint = sfo3.digitaloceanspaces.com

acl = private

Replace AWS_ACCESS_KEY and AWS_SECRET with your AWS credentials, and SPACES_ACCESS_KEY and SPACES_SECRET with your Spaces credentials. Also make sure that:

- s3.region lists the correct region for the bucket you plan to copy data into; and

- spaces.endpoint is pointing to the correct Spaces region.
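If you'd rather not paste secrets into an editor by hand, the same file can be generated from environment variables. This is a sketch, not an official Rclone workflow: the function name write_rclone_conf and the variable names (AWS_ACCESS_KEY, AWS_SECRET, SPACES_ACCESS_KEY, SPACES_SECRET, S3_REGION, SPACES_ENDPOINT) are assumptions chosen to match the placeholders above.

```shell
#!/usr/bin/env bash
# Write an rclone.conf with an [s3] and a [spaces] remote to the given path.
# Assumed (hypothetical) environment variables:
#   AWS_ACCESS_KEY, AWS_SECRET, SPACES_ACCESS_KEY, SPACES_SECRET (required)
#   S3_REGION (default: us-west-2)
#   SPACES_ENDPOINT (default: sfo3.digitaloceanspaces.com)
write_rclone_conf() {
  local conf_path=$1 name
  # Refuse to write a config with empty credentials.
  for name in AWS_ACCESS_KEY AWS_SECRET SPACES_ACCESS_KEY SPACES_SECRET; do
    if [ -z "${!name:-}" ]; then
      echo "missing environment variable: $name" >&2
      return 1
    fi
  done
  mkdir -p "$(dirname "$conf_path")"
  cat > "$conf_path" <<EOF
[s3]
type = s3
env_auth = false
access_key_id = ${AWS_ACCESS_KEY}
secret_access_key = ${AWS_SECRET}
region = ${S3_REGION:-us-west-2}
acl = private

[spaces]
type = s3
env_auth = false
access_key_id = ${SPACES_ACCESS_KEY}
secret_access_key = ${SPACES_SECRET}
endpoint = ${SPACES_ENDPOINT:-sfo3.digitaloceanspaces.com}
acl = private
EOF
  # The file holds secrets, so make it readable by the current user only.
  chmod 600 "$conf_path"
}

# Example (overwrites any existing file at that path):
# write_rclone_conf "$HOME/.config/rclone/rclone.conf"
```

Note that the function overwrites whatever is at the target path, so back up any existing rclone.conf first.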

To test your connection to Amazon S3, save this file and, at a command prompt, type:

rclone lsd s3:

If you configured everything correctly, you should see a list of your Amazon S3 buckets.

Next, test your connection to Spaces with the following command:

rclone lsd spaces:

You should see a list of all Spaces you have created in that region.

If everything checks out, go ahead and copy all of your data from Spaces to Amazon S3 using the following command:

rclone sync spaces:jayallentest s3:jayallen-spaces-test

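(Make sure to replace the Space name and S3 bucket name with the values appropriate to your accounts. Note that rclone sync makes the destination match the source, which includes deleting files in the destination that don't exist in the source. If your bucket already holds data you want to keep, use rclone copy instead.)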
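For a bucket that matters, you may want to preview the transfer and verify it afterward. Here is one way to wrap those steps, using Rclone's --dry-run and --progress flags and its check command. The three function names are my own, and the sketch assumes the s3 and spaces remotes from the rclone.conf above.

```shell
#!/usr/bin/env bash
# Small wrappers around rclone for a Spaces-to-S3 transfer.
# Each takes SOURCE and DEST, e.g. spaces:jayallentest s3:jayallen-spaces-test

# Show what would be copied or deleted, without changing anything.
preview_sync() {
  rclone sync "$1" "$2" --dry-run
}

# Perform the transfer, printing live progress.
run_sync() {
  rclone sync "$1" "$2" --progress
}

# Compare source and destination after the transfer.
verify_sync() {
  rclone check "$1" "$2"
}

# Suggested order, reviewing the output of each step before the next:
# preview_sync spaces:jayallentest s3:jayallen-spaces-test
# run_sync     spaces:jayallentest s3:jayallen-spaces-test
# verify_sync  spaces:jayallentest s3:jayallen-spaces-test
```

Keeping the steps as separate functions means you can read the dry-run output before anything is written to the bucket.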

By default, the Rclone command line won't give you any direct feedback, even if the operation is successful (add the -P flag to show progress, or -v for verbose output). However, once it returns, you should see all of the data from your Space now located in your Amazon S3 bucket.

And that's it! With just a little setup and configuration, you can now easily transfer data from DigitalOcean Spaces to Amazon S3.

Next steps

Sign up for TinyStacks - deploy your code to the cloud in minutes

Learn why we need a better solution to app deployment than Heroku 2.0